Setting up a RabbitMQ cluster on Kubernetes

INFO

This post documents how to build a three-node RabbitMQ cluster on Kubernetes with a StatefulSet. Data is persisted to NFS through a StorageClass.

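Prerequisites:

① Kubernetes version: v1.28.2

② Data is persisted on NFS, so the NFS client must be installed on every node; otherwise creating the provisioner fails because it cannot connect to the NFS server.

On Ubuntu, for example:

apt-get install nfs-common

③ The examples place all resources in the middleware namespace. To use a different namespace, replace it globally in the manifests:

sed -i 's/middleware/[your-namespace]/g' ./*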
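Because the sed command above edits every file in place, it can be safer to render the manifests into a separate directory first and review the result. The following is a minimal sketch, assuming the manifests are `*.yaml` files in a single directory; the function name `render_for_namespace` and its arguments are hypothetical. Note that the replacement also rewrites the namespace embedded in `cluster_formation.k8s.hostname_suffix` and in the RBAC subjects, which is intended.

```shell
# render_for_namespace SRC_DIR NEW_NAMESPACE OUT_DIR
# Copies every *.yaml file from SRC_DIR to OUT_DIR, replacing the string
# "middleware" with NEW_NAMESPACE. The source files are left untouched.
render_for_namespace() {
  src_dir=$1
  new_ns=$2
  out_dir=$3
  mkdir -p "$out_dir"
  for f in "$src_dir"/*.yaml; do
    [ -e "$f" ] || continue   # skip when the glob matched nothing
    sed "s/middleware/$new_ns/g" "$f" > "$out_dir/$(basename "$f")"
  done
}
```

For example, `render_for_namespace ./manifests my-namespace ./rendered` followed by `diff -r ./manifests ./rendered` shows exactly which lines changed before anything is applied.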

1. NFS groundwork

1.1 Create the ServiceAccount

---
kind: ServiceAccount
apiVersion: v1
metadata:
  namespace: middleware
  name: nfs-client-provisioner

1.2 Create the RBAC resources

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  # ClusterRole is cluster-scoped, so no namespace is set
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  # ClusterRoleBinding is cluster-scoped, so no namespace is set
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: middleware
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: middleware
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: middleware
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: middleware
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

With the configuration above, the ServiceAccount named nfs-client-provisioner is granted permission to operate on cluster resources (such as PVCs).
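To confirm that the bindings took effect, `kubectl auth can-i` can impersonate the ServiceAccount and ask the API server about individual verbs. This is a minimal sketch, assuming `kubectl` is configured for the cluster and that your own user is allowed to impersonate ServiceAccounts; the helper names are hypothetical.

```shell
# Build the user name Kubernetes assigns to a ServiceAccount.
sa_subject() {
  # $1 = namespace, $2 = ServiceAccount name
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Ask the API server whether the provisioner's ServiceAccount may perform
# some of the operations granted above. Each call prints "yes" or "no".
check_provisioner_rbac() {
  subject=$(sa_subject middleware nfs-client-provisioner)
  kubectl auth can-i create persistentvolumes --as="$subject"
  kubectl auth can-i update persistentvolumeclaims --as="$subject" -n middleware
  kubectl auth can-i update endpoints --as="$subject" -n middleware
}
```

Calling `check_provisioner_rbac` after applying the RBAC manifests should print `yes` three times; a `no` usually points at a namespace mismatch between the binding's subject and the ServiceAccount.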

1.3 Create the provisioner

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  # StorageClass is cluster-scoped, so no namespace is set
  name: sx-managed-nfs-storage
provisioner: sx-nfs-client-provisioner # or choose another name; must match the Deployment's PROVISIONER_NAME env
parameters:
  archiveOnDelete: "false"
---
kind: Deployment
apiVersion: apps/v1
metadata:
  namespace: middleware
  name: sx-nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      namespace: middleware
      labels:
        app: nfs-client-provisioner
    spec:
      # serviceAccountName replaces the deprecated serviceAccount field
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: sx-nfs-client-provisioner
          imagePullPolicy: IfNotPresent
          # first attempt; PV creation failed with this image (see the note below)
          #image: registry.cn-hangzhou.aliyuncs.com/xhsx/nfs-client-provisioner:latest
          image: registry.cn-hangzhou.aliyuncs.com/xhsx/nfs-subdir-external-provisioner:v4.0.0
          volumeMounts:
            - name: sx-nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: sx-nfs-client-provisioner
            - name: NFS_SERVER
              value: 192.168.0.101
            - name: NFS_PATH
              value: /nfs_win/k8s
      volumes:
        - name: sx-nfs-client-root
          nfs:
            server: 192.168.0.101
            path: /nfs_win/k8s

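Pay attention to the image used here. I started with the image that is now commented out, and PV creation kept failing with the message: waiting for a volume to be created, either by external provisioner "xxxx"

Some posts suggest editing /etc/kubernetes/manifests/kube-apiserver.yaml, but whether that applies depends on the Kubernetes version. On 1.26 and later, simply use the image shown above.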
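Before moving on to RabbitMQ, dynamic provisioning can be checked with a throwaway claim. The sketch below prints a small PVC that references the StorageClass from this section; the claim name `nfs-test-claim` and the 1Mi size are illustrative assumptions, not part of the original setup.

```shell
# Print a small test PVC that uses the StorageClass defined above.
# The claim name "nfs-test-claim" and the 1Mi request are illustrative.
test_pvc_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-test-claim
  namespace: middleware
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: sx-managed-nfs-storage
  resources:
    requests:
      storage: 1Mi
EOF
}
```

Piping it into the cluster with `test_pvc_manifest | kubectl apply -f -` and then running `kubectl -n middleware get pvc nfs-test-claim` should show the claim as Bound after a few seconds. If it stays Pending, `kubectl -n middleware logs deploy/sx-nfs-client-provisioner` is the first place to look. Delete the claim afterwards with `kubectl -n middleware delete pvc nfs-test-claim`.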

2. Build the RabbitMQ cluster

2.1 Create the configuration

apiVersion: v1
kind: ConfigMap
metadata:
  name: rmq-cluster-config
  namespace: middleware
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
data:
  enabled_plugins: |
    [rabbitmq_management,rabbitmq_peer_discovery_k8s].
  rabbitmq.conf: |
    loopback_users.guest = false
    ## Clustering
    cluster_formation.peer_discovery_backend = rabbit_peer_discovery_k8s
    cluster_formation.k8s.host = kubernetes.default.svc.cluster.local
    cluster_formation.k8s.address_type = hostname
    #################################################
    # middleware is rabbitmq-cluster's namespace    #
    #################################################
    cluster_formation.k8s.hostname_suffix = .rmq-cluster.middleware.svc.cluster.local
    cluster_formation.node_cleanup.interval = 10
    cluster_formation.node_cleanup.only_log_warning = true
    cluster_partition_handling = autoheal
    ## queue master locator
    queue_master_locator=min-masters
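With `address_type = hostname`, the peer discovery plugin builds each peer's host name by appending `hostname_suffix` to the host name reported for the pod (for StatefulSet pods, the pod name). The result has to match the host part of `RABBITMQ_NODENAME` set in the StatefulSet of section 2.4. The helper below is a hypothetical sketch that prints the node names this configuration is expected to produce, which makes typos in the suffix easier to spot.

```shell
# peer_node_name POD_NAME SERVICE NAMESPACE
# Prints the RabbitMQ node name expected for one StatefulSet pod:
# the pod name followed by the hostname_suffix used in rabbitmq.conf.
peer_node_name() {
  suffix=".$2.$3.svc.cluster.local"
  printf 'rabbit@%s%s\n' "$1" "$suffix"
}

# List the node names for a three-replica StatefulSet named rmq-cluster.
for i in 0 1 2; do
  peer_node_name "rmq-cluster-$i" rmq-cluster middleware
done
```

The first line printed is `rabbit@rmq-cluster-0.rmq-cluster.middleware.svc.cluster.local`; these are the names that should appear in the output of `rabbitmqctl cluster_status` once the cluster has formed.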

2.2 Create the Secret

apiVersion: v1
kind: Secret
metadata:
  name: rmq-cluster-secret
  namespace: middleware
stringData:
  cookie: ERLANG_COOKIE
  username: admin
  password: admin@123
type: Opaque

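Note: in theory this username and password should be the credentials for logging in to the management console later. In practice, the default account was still guest/guest, which means this setting did not take effect.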
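All nodes must share the same Erlang cookie, and the literal value `ERLANG_COOKIE` used above is easy to guess. As a minimal sketch, the Secret could instead be created from the command line with a random cookie; the helper names `gen_cookie` and `create_rmq_secret` are hypothetical, and the username and password are the values from the manifest above.

```shell
# Generate a random 48-character hexadecimal string to use as the Erlang cookie.
gen_cookie() {
  head -c 24 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Create the same Secret as the manifest above, but with a random cookie.
create_rmq_secret() {
  kubectl -n middleware create secret generic rmq-cluster-secret \
    --from-literal=cookie="$(gen_cookie)" \
    --from-literal=username=admin \
    --from-literal=password='admin@123'
}
```

Run `create_rmq_secret` in place of applying the Secret manifest. If nodes were already started with a different cookie, they will refuse to cluster with nodes using the new one until they are restarted with the same value.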

2.3 Create the Service

apiVersion: v1
kind: Service
metadata:
  name: rmq-cluster
  namespace: middleware
  labels:
    app: rmq-cluster
spec:
  selector:
    app: rmq-cluster
  clusterIP: 10.96.0.11 # fixed clusterIP for convenience
  ports:
    - name: http
      port: 15672
      protocol: TCP
      targetPort: 15672
    - name: amqp
      port: 5672
      protocol: TCP
      targetPort: 5672
  type: ClusterIP
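Kubernetes publishes per-pod DNS names of the form `<pod>.<service>.<namespace>.svc.cluster.local` only when the Service named in the pod's subdomain is headless (`clusterIP: None`). If the nodes cannot resolve each other through the names built from `hostname_suffix`, one option is to add a headless Service for peer discovery and keep the ClusterIP Service above for clients. The sketch below is an assumption and not part of the original setup: the name `rmq-cluster-headless` is hypothetical, and using it would also mean changing `serviceName`, `K8S_SERVICE_NAME`, `hostname_suffix`, and `RABBITMQ_NODENAME` to match.

```shell
# Print a headless Service that selects the same pods as rmq-cluster.
headless_service_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: rmq-cluster-headless        # hypothetical name
  namespace: middleware
  labels:
    app: rmq-cluster
spec:
  clusterIP: None                   # headless: DNS returns pod addresses directly
  publishNotReadyAddresses: true    # let peers resolve each other before readiness passes
  selector:
    app: rmq-cluster
  ports:
    - name: amqp
      port: 5672
      targetPort: 5672
EOF
}
```

It can be applied with `headless_service_manifest | kubectl apply -f -`. Afterwards, `nslookup rmq-cluster-0.rmq-cluster-headless.middleware.svc.cluster.local` from inside any pod is a quick way to check that per-pod records exist.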

2.4 Create the pods

A StatefulSet is used here.

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: rmq-cluster
  namespace: middleware
  labels:
    app: rmq-cluster
spec:
  replicas: 3
  selector:
    matchLabels:
      app: rmq-cluster
  serviceName: rmq-cluster
  template:
    metadata:
      labels:
        app: rmq-cluster
    spec:
      # reuses the provisioner's ServiceAccount; its Role allows reading
      # endpoints in this namespace, which peer discovery needs
      serviceAccountName: nfs-client-provisioner
      terminationGracePeriodSeconds: 30
      containers:
        - name: rabbitmq
          image: registry.cn-hangzhou.aliyuncs.com/xhsx/rabbitmq:3.7-management
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 15672
              name: http
              protocol: TCP
            - containerPort: 5672
              name: amqp
              protocol: TCP
          command:
            - sh
          args:
            - -c
            # copy the config out of the ConfigMap mount, then start the server
            - cp -v /etc/rabbitmq/rabbitmq.conf ${RABBITMQ_CONFIG_FILE}; exec docker-entrypoint.sh rabbitmq-server
          env:
            - name: RABBITMQ_DEFAULT_USER
              valueFrom:
                secretKeyRef:
                  key: username
                  name: rmq-cluster-secret
            - name: RABBITMQ_DEFAULT_PASS
              valueFrom:
                secretKeyRef:
                  key: password
                  name: rmq-cluster-secret
            - name: RABBITMQ_ERLANG_COOKIE
              valueFrom:
                secretKeyRef:
                  key: cookie
                  name: rmq-cluster-secret
            - name: K8S_SERVICE_NAME
              value: rmq-cluster
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: RABBITMQ_USE_LONGNAME
              value: "true"
            - name: RABBITMQ_NODENAME
              value: rabbit@$(POD_NAME).rmq-cluster.$(POD_NAMESPACE).svc.cluster.local
            - name: RABBITMQ_CONFIG_FILE
              value: /var/lib/rabbitmq/rabbitmq.conf
          livenessProbe:
            exec:
              command:
                - rabbitmqctl
                - status
            initialDelaySeconds: 30
            timeoutSeconds: 10
          readinessProbe:
            exec:
              command:
                - rabbitmqctl
                - status
            initialDelaySeconds: 10
            timeoutSeconds: 10
          volumeMounts:
            - name: config-volume
              mountPath: /etc/rabbitmq
              readOnly: false
            - name: rabbitmq-storage
              mountPath: /var/lib/rabbitmq
              readOnly: false
      volumes:
        - name: config-volume
          configMap:
            items:
              - key: rabbitmq.conf
                path: rabbitmq.conf
              - key: enabled_plugins
                path: enabled_plugins
            name: rmq-cluster-config
        # one ReadWriteMany claim shared by all three pods
        - name: rabbitmq-storage
          persistentVolumeClaim:
            claimName: rabbitmq-cluster-storage
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rabbitmq-cluster-storage
  namespace: middleware
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
  storageClassName: sx-managed-nfs-storage
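Once the pods are running, it is worth checking that the three nodes actually joined one cluster rather than starting as three standalone brokers. The following is a minimal sketch, assuming `kubectl` access to the namespace; the helper names are hypothetical, while `rabbitmqctl cluster_status` is the standard RabbitMQ command.

```shell
# Print the pod names a StatefulSet creates: <name>-0 .. <name>-(replicas-1).
statefulset_pods() {
  # $1 = StatefulSet name, $2 = replica count
  i=0
  while [ "$i" -lt "$2" ]; do
    printf '%s-%s\n' "$1" "$i"
    i=$((i + 1))
  done
}

# Run "rabbitmqctl cluster_status" in every pod of the cluster.
check_cluster() {
  for pod in $(statefulset_pods rmq-cluster 3); do
    echo "== $pod"
    kubectl -n middleware exec "$pod" -- rabbitmqctl cluster_status
  done
}
```

Running `check_cluster` should list the same three `rabbit@rmq-cluster-N...` nodes under the running nodes in each pod's output. If a pod only lists itself, the peer discovery settings and DNS resolution between pods are the usual suspects.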

2.5 Create the Ingress

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: middleware
  name: rabbitmq-ingress
spec:
  ingressClassName: nginx
  rules:
    - host: rabbitmq.shengxiao.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: rmq-cluster
                port:
                  number: 15672

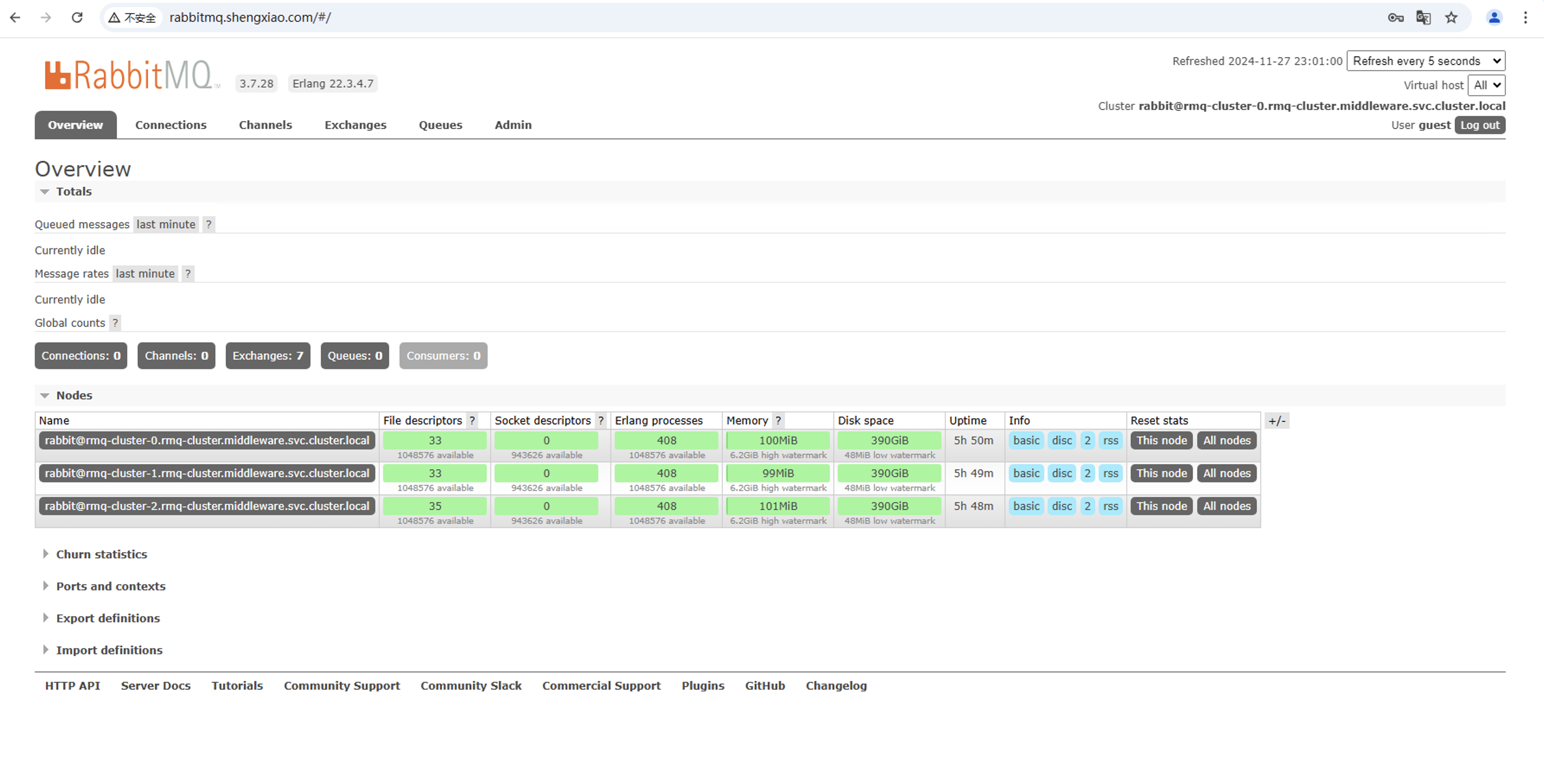

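3. Accessing the management console

3.1 Domain name resolution

Point the host name used in the Ingress above at the IP of the node where the ingress controller runs. The domain here is made up, so I simply edited the /etc/hosts file.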
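Editing /etc/hosts by hand is easy to get wrong when repeated, so the step can be scripted. The following is a minimal sketch with a hypothetical helper, `add_hosts_entry`, that appends the mapping only if the host name is not already present; the IP address in the usage note is a placeholder for your ingress controller node.

```shell
# add_hosts_entry FILE IP HOSTNAME
# Appends "IP HOSTNAME" to FILE unless some line already maps HOSTNAME.
add_hosts_entry() {
  if awk -v h="$3" '{ for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
                    END { exit !found }' "$1" 2>/dev/null; then
    return 0   # entry already present, nothing to do
  fi
  printf '%s %s\n' "$2" "$3" >> "$1"
}
```

For example, `sudo sh -c '. ./hosts.sh; add_hosts_entry /etc/hosts 192.168.0.50 rabbitmq.shengxiao.com'`, assuming the function is saved in a file named hosts.sh and 192.168.0.50 stands in for the node IP.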

3.2 Console

URL: http://rabbitmq.shengxiao.com

Log in with the default account guest/guest to enter the console.
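The same login can be checked from a terminal through the management plugin's HTTP API, which is handy when the browser cannot reach the Ingress. This is a minimal sketch, assuming `curl` is installed and the host name resolves as described in 3.1; the helper names are hypothetical, while `/api/overview` is an endpoint of the RabbitMQ management API.

```shell
# Build the URL of the management API overview endpoint from a base URL.
overview_url() {
  printf '%s/api/overview\n' "${1%/}"   # drop one trailing slash, if any
}

# Print the HTTP status code returned for the given credentials.
management_status() {
  # $1 = base URL, $2 = user, $3 = password
  curl -s -o /dev/null -w '%{http_code}\n' -u "$2:$3" "$(overview_url "$1")"
}
```

`management_status http://rabbitmq.shengxiao.com guest guest` should print 200 when the console is reachable and the credentials are accepted, and 401 when the credentials are rejected.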